Inter-Rater Reliability

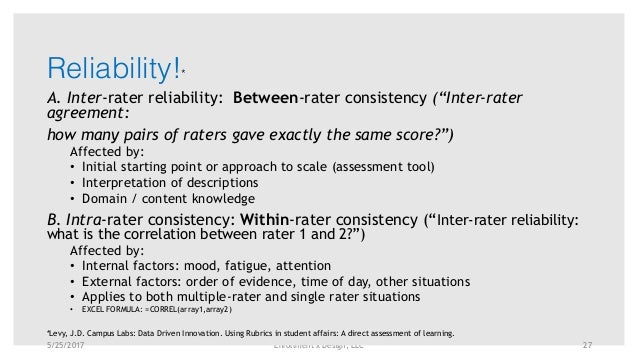

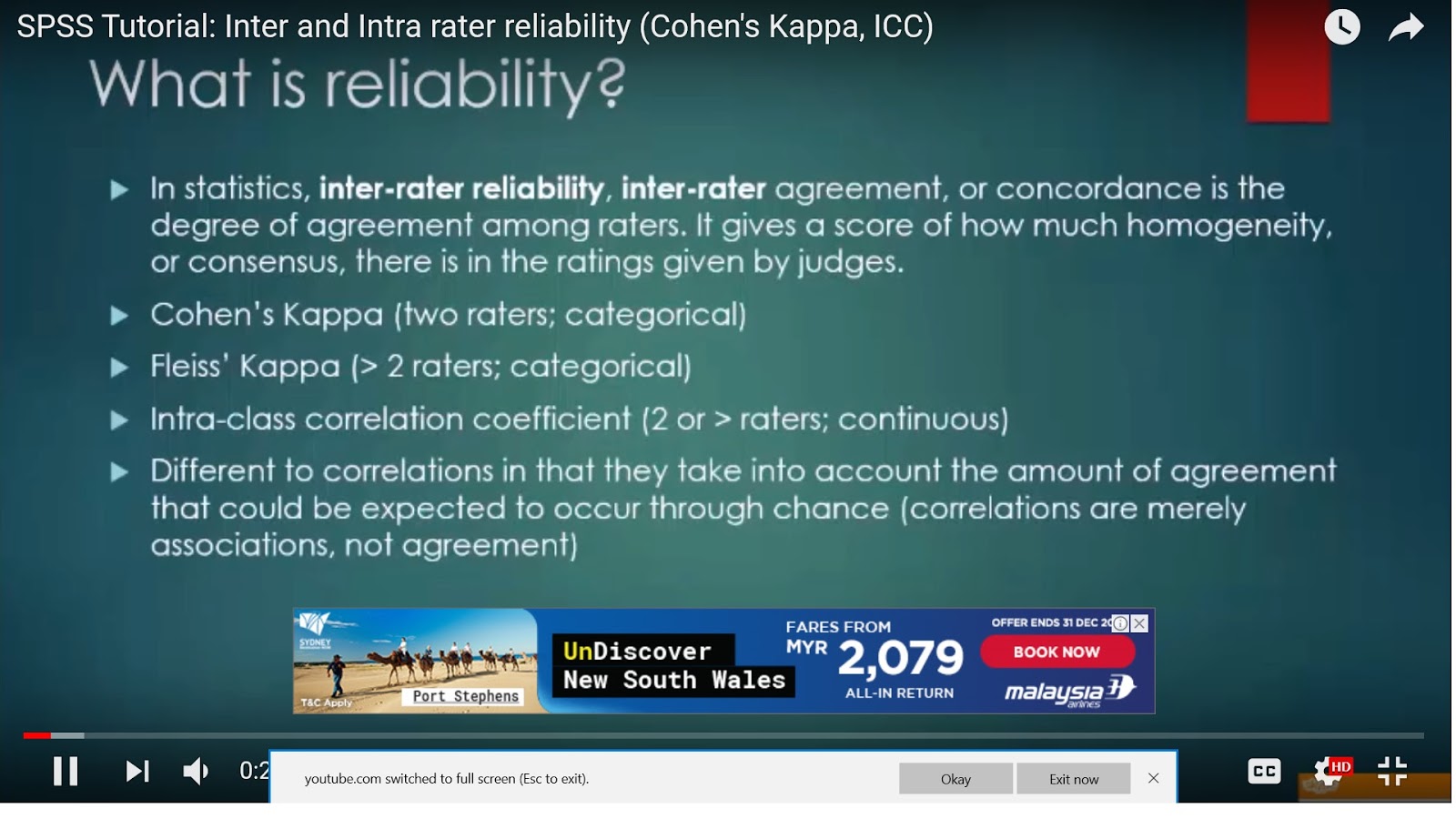

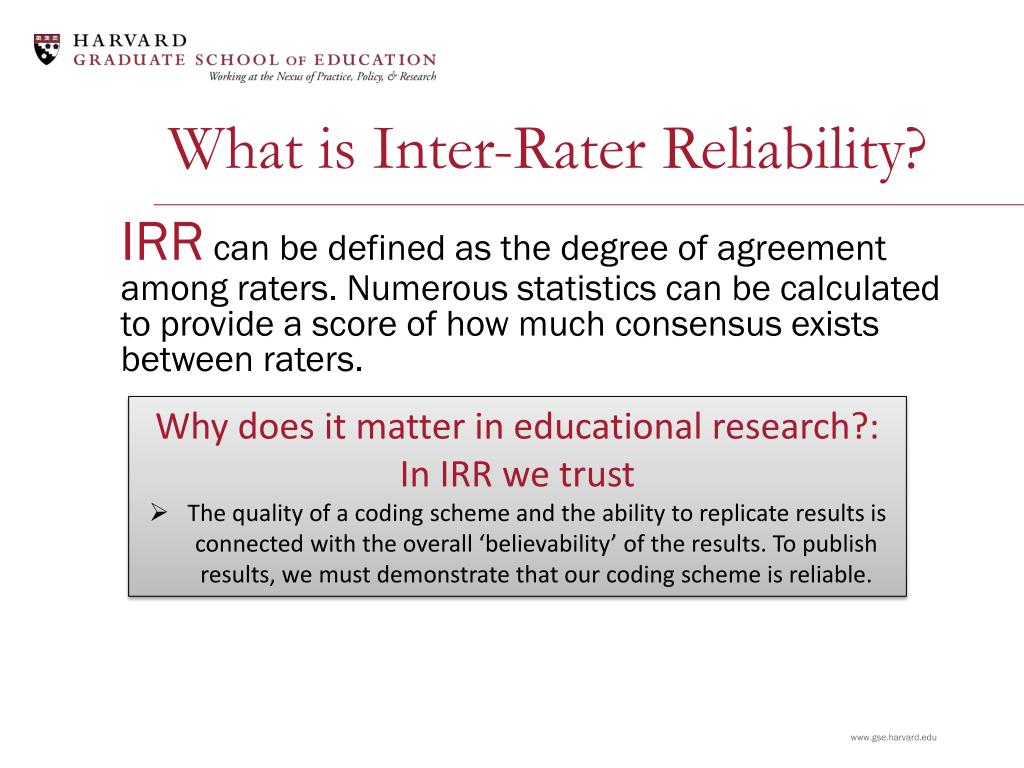

Inter-rater reliability refers to the degree to which different raters give consistent estimates of the same behavior.

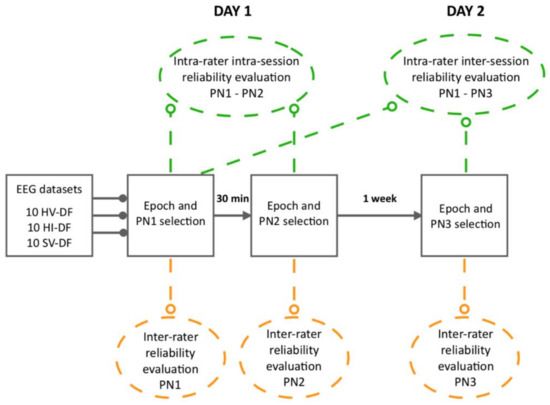

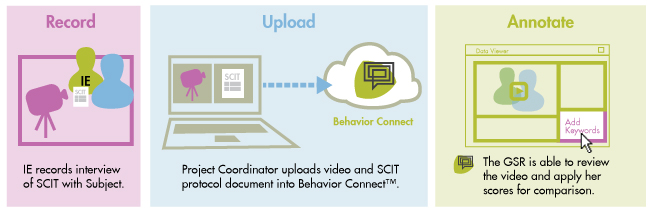

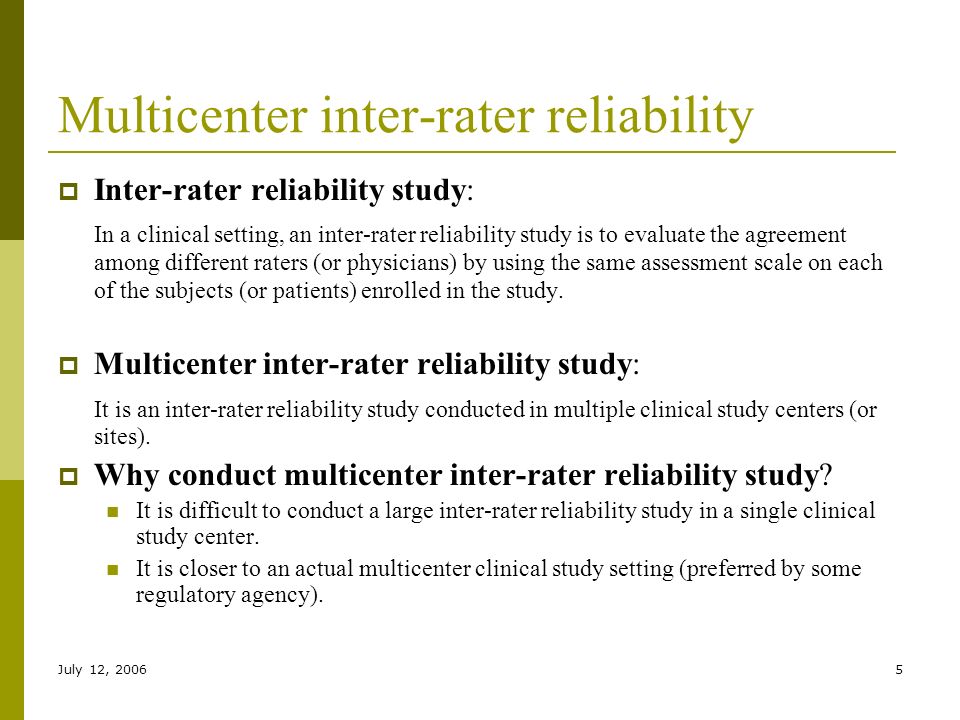

Within the scope of qualitative research, inter-rater reliability (IRR) is a measure of, or a conversation around, the consistency or repeatability with which multiple coders apply codes to qualitative data (William M.K. Trochim, Reliability). It does, however, require multiple raters or observers. Inter-rater reliability can be used for interviews. In qualitative coding, IRR is measured primarily to assess the degree of consistency in how a code system is applied.
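
The simplest way to put a number on how consistently two coders apply a code system is percent agreement: the share of segments that received the same code from both. The Python sketch below is minimal and rests on a few assumptions. It treats each coder as assigning exactly one code per segment. The function name and the example codes are hypothetical.

```python
def percent_agreement(coder_a, coder_b):
    """Share of segments to which two coders assigned the same code."""
    if len(coder_a) != len(coder_b):
        raise ValueError("both coders must code the same segments")
    if not coder_a:
        raise ValueError("no segments to compare")
    # Count segments where both coders chose the identical code.
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)

# Hypothetical codes assigned to five interview excerpts.
coder_a = ["barrier", "support", "support", "cost", "barrier"]
coder_b = ["barrier", "support", "cost", "cost", "barrier"]
print(percent_agreement(coder_a, coder_b))  # → 0.8
```

Percent agreement does not account for agreement that would occur by chance, which is why chance-corrected statistics such as kappa are often reported alongside it.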

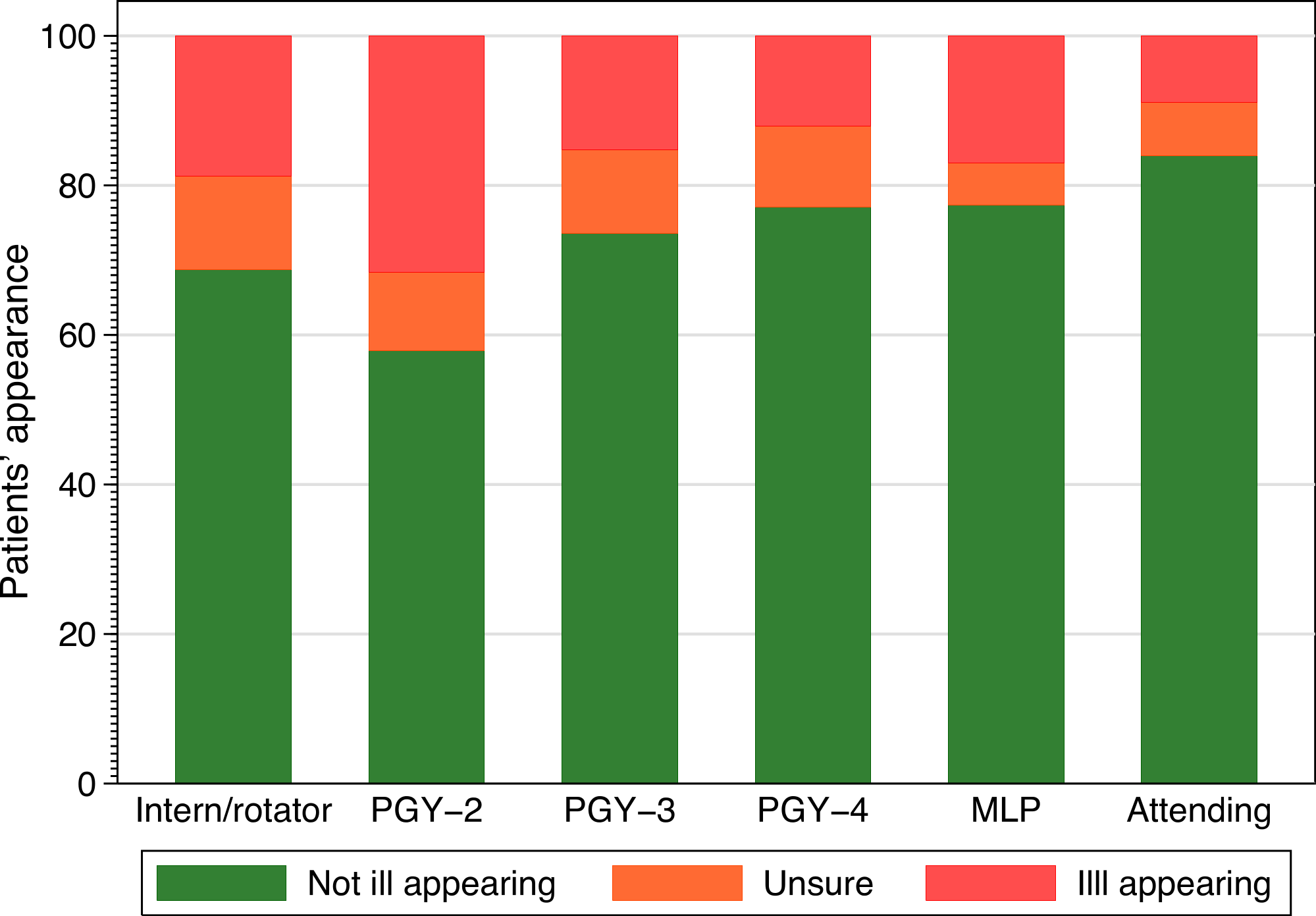

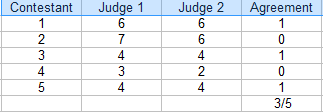

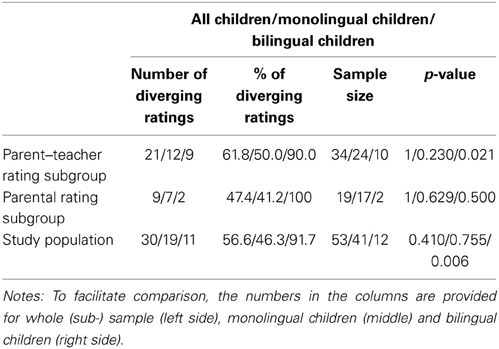

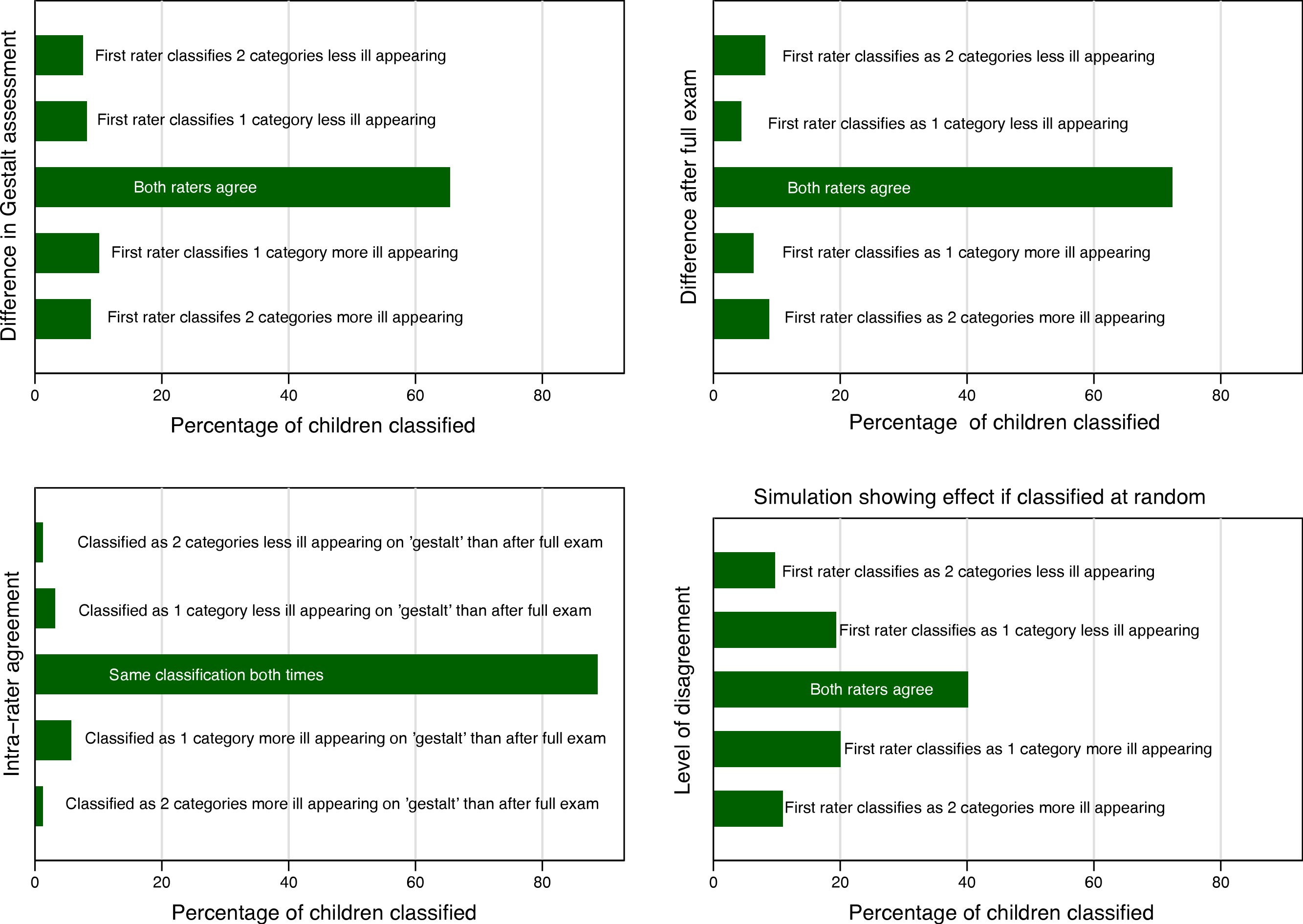

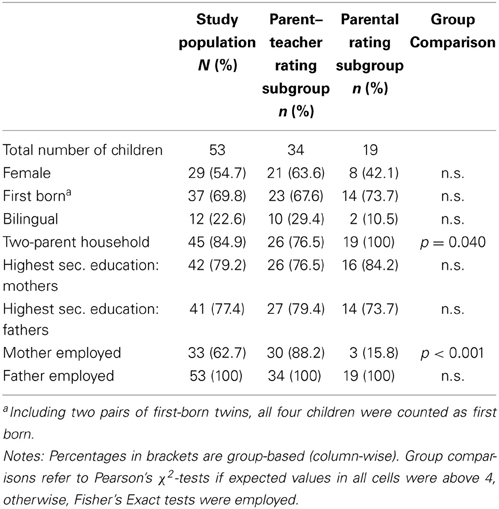

By re-abstracting a sample of the same charts to determine accuracy, we can project that information to the total cases abstracted. Because of infrequent diagnoses, mixed diagnoses, and the number of subthreshold protocols in this study, kappas for individual diagnoses were not provided. One example of inter-rater reliability in use would be a job performance assessment by office managers. If the employee being rated received a score of 9 (a score of 10 being perfect) from three managers and a score of 2 from another manager, inter-rater reliability could be used to determine that something is wrong with the method of scoring.
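
The office-manager example can be made concrete with a minimal Python sketch that flags a rater whose score sits far from everyone else's. Several parts of it are illustrative assumptions rather than a standard IRR statistic: the leave-one-out comparison, the function name `flag_discordant_raters`, and the 3-point threshold. Formal coefficients such as kappa or the intraclass correlation are the usual tools.

```python
from statistics import mean

def flag_discordant_raters(scores, threshold=3.0):
    """Return indices of raters whose score differs from the mean of the
    other raters' scores by more than `threshold` points (hypothetical cut-off)."""
    if len(scores) < 2:
        raise ValueError("need at least two raters to compare")
    flagged = []
    for i, score in enumerate(scores):
        # Compare each rater against the mean of everyone else (leave-one-out).
        others = scores[:i] + scores[i + 1:]
        if abs(score - mean(others)) > threshold:
            flagged.append(i)
    return flagged

# Three managers give a 9 and one gives a 2 (10 = perfect).
scores = [9, 9, 9, 2]
print(max(scores) - min(scores))       # → 7
print(flag_discordant_raters(scores))  # → [3]
```

Only the fourth manager is flagged. That points to the scoring method, or to that rater's use of it, as something to examine.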

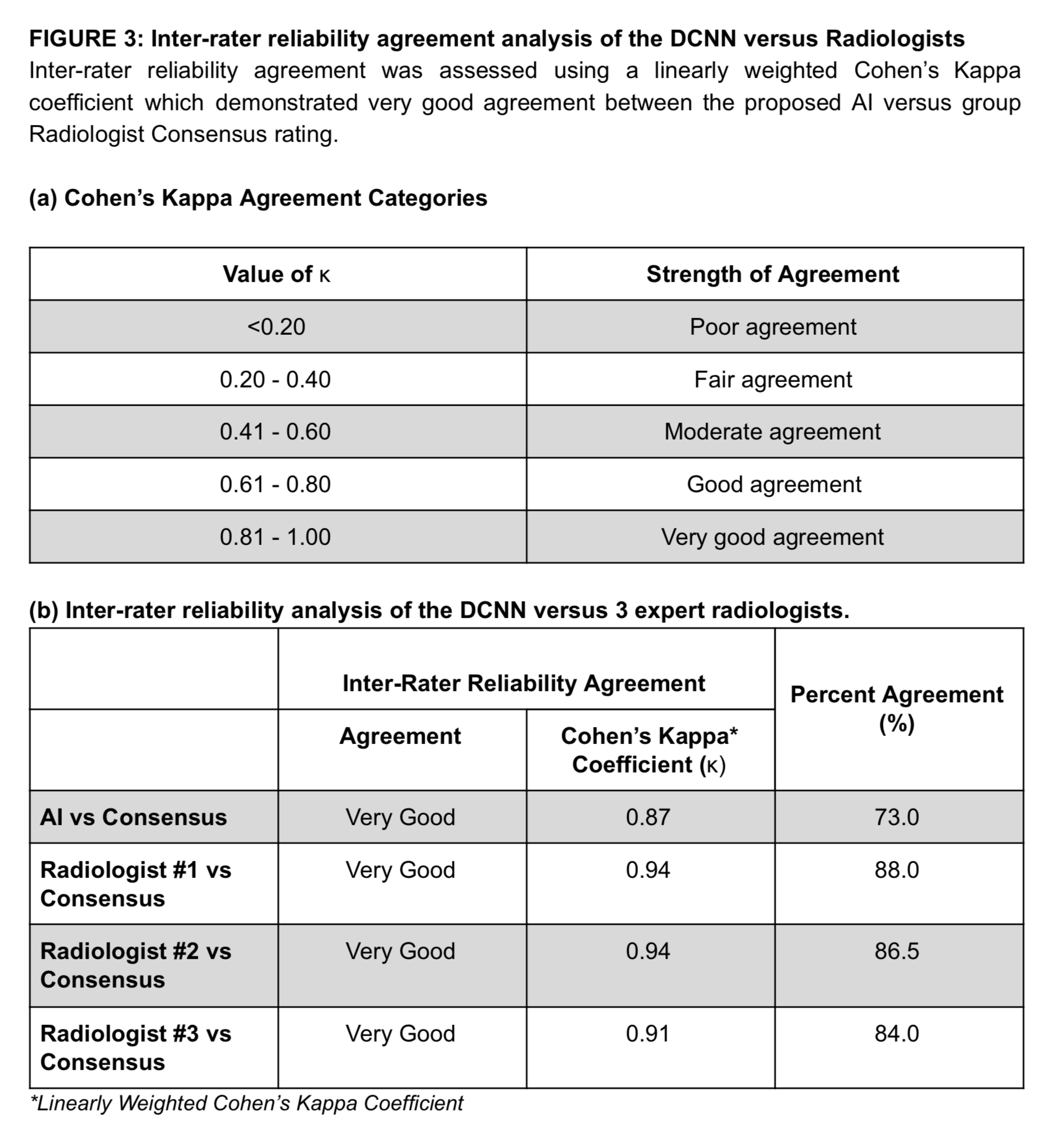

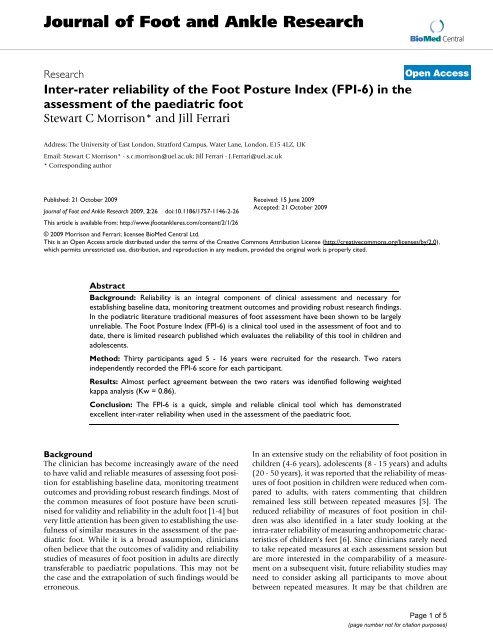

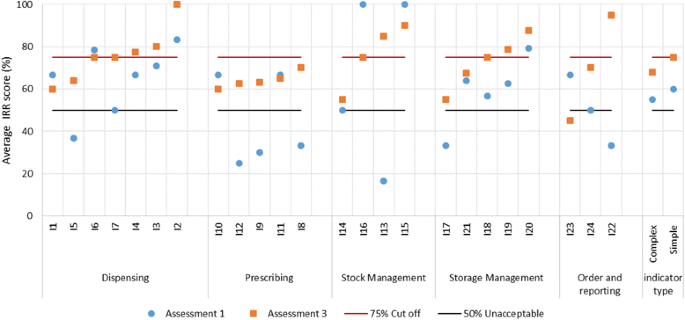

Inter-rater reliability for the presence or absence of any personality disorder with the SIDP-R was moderate, with a kappa of 0.53. Inter-rater reliability is one of the best ways to estimate reliability when your measure is an observation. Inter-rater reliability (IRR) is also the process by which we determine how reliable a Core Measures or registry abstractor's data entry is.

In statistics, inter-rater reliability (also called by various similar names, such as inter-rater agreement, inter-rater concordance, and inter-observer reliability) is the degree of agreement among raters. It is a score of how much homogeneity or consensus exists in the ratings given by various judges.
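
A kappa value such as the 0.53 above corrects raw agreement for the agreement expected by chance. The formula is κ = (p_o - p_e) / (1 - p_e). Here p_o is the observed proportion of agreement, and p_e is the chance agreement implied by each rater's marginal label frequencies. The Python sketch below computes Cohen's kappa for two raters. It is minimal, and the function name is illustrative. The presence/absence ratings are hypothetical, so the sketch does not reproduce the SIDP-R result.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical labels to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: proportion of items given identical labels.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions, summed over labels.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)

# Hypothetical ratings for ten cases: 1 = disorder present, 0 = absent.
rater_a = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
rater_b = [1, 1, 0, 0, 0, 0, 0, 1, 1, 0]
print(round(cohens_kappa(rater_a, rater_b), 3))  # → 0.583
```

In this data the raters agree on 80% of cases. Because 52% agreement would be expected by chance, kappa is noticeably lower than raw agreement. The same calculation can compare an original chart abstraction with a re-abstraction of the same data elements.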

Inter-rater reliability matters at the Olympics, for example. Watching any sport that uses judges, such as Olympic ice skating, or a dog show relies on human observers maintaining a high degree of consistency with one another. As an alternative, you could look at the correlation between the ratings of the same single observer repeated on two different occasions. The test-retest method assesses the external consistency of a test.
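
One common way to correlate a single observer's ratings from two occasions is the Pearson correlation coefficient. The Python sketch below is minimal: it implements Pearson's r directly. The function name and the two sets of ratings for five performances are hypothetical.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences of ratings."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least two ratings")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-products and sums of squares of deviations from the means.
    s_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    s_xx = sum((a - mean_x) ** 2 for a in x)
    s_yy = sum((b - mean_y) ** 2 for b in y)
    if s_xx == 0 or s_yy == 0:
        raise ValueError("ratings must vary on both occasions")
    return s_xy / sqrt(s_xx * s_yy)

# Hypothetical: one judge scores the same five performances on two occasions.
occasion_1 = [7, 5, 9, 6, 8]
occasion_2 = [8, 5, 9, 5, 8]
print(pearson_r(occasion_1, occasion_2))  # about 0.93
```

Correlation measures consistency rather than absolute agreement. A judge who scores every performance exactly one point higher on the second occasion still gets r = 1.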
